Judicata automated review scores brief's lines of attack

Artificial intelligence won’t write a brief, but it can do much more than spell-check a lawyer’s work. Legal-research startup Judicata performs a range of tests in its new automated service, from verifying direct quotations to probing for weak points in legal arguments.

This week, Judicata is scheduling its first demonstrations of “Clerk,” its new markup tool. Clerk is currently available only for California cases, and some subscribers to the company’s online California case citator took part in initial testing.

Judicata, whose venture funders include PayPal co-founder Peter Thiel, plans to expand the service to federal and additional state jurisdictions. In an interview with the ABA Journal, CEO Itai Gurari gives no timeline for such an expansion.

When a subscriber uploads a brief, the Judicata website assigns percentage grades based on the citations’ strength as precedent, whether quoted text matches the source verbatim, and how similar cases have typically turned out. Gurari says this analysis goes beyond confirming whether citations represent good law.

“It’s also mapping the text to our knowledge of cases, our knowledge of arguments that have worked,” Gurari says. The review evaluates the strength of a brief relative to others advancing a similar viewpoint or presented to the same judge. The review also notes how often like cases were appealed and what happened at the appellate level.

A sample report flags citations vulnerable to being distinguished as materially different, and notes where quotations vary from the opinion’s language. The robot review also names cases not cited in the brief, representing either opposing arguments to rebut or successful strategies to review. The report includes statistics relating to both the cause of action and the judge hearing the case.

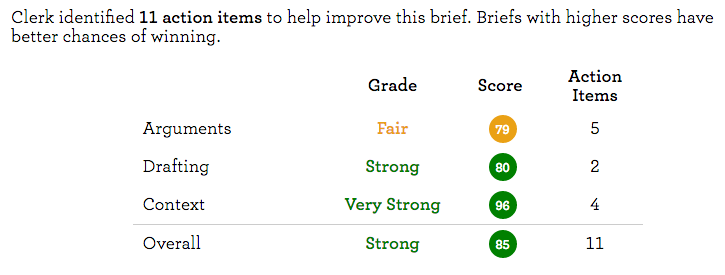

The Clerk markup tool assigns grades to legal argument, drafting accuracy and resolution of similar cases.

“This is not a predictive tool,” says product manager Beth Hoover. As stockbrokers warn, past performance does not predict future returns. Hoover says the approach is more like grading a high-school paper for grammar, clarity and focus. “An argument should be well expressed,” Hoover says. “We’re helping people to see how to improve what they’ve written.”

It won’t create a successful strategy for you, but Clerk might help make the best of a tactical challenge. “If you’re in a sticky situation, the score you can get is pretty limited,” Hoover says. “The context of how cases fare may be less important than the argument itself.” In a blog post, she runs the software on a filing from a previously resolved property-rights case.

Judicata CEO Itai Gurari.

Computer-aided legal research tends either to track the disposition of cases or to look for similarities among texts. By combining aspects of both approaches, the Clerk tool is something of a Swiss Army knife with many uses. Paralegals may find the drafting aids useful, Hoover says, while associates may benefit from the argument prompts.

Gurari claims a sports precedent for his scoring approach. In the Michael Lewis book Moneyball, baseball executive Billy Beane employs data to assemble a competitive team. “The story of Moneyball is that there are statistics that correlate with better performance,” Gurari says.

In a blog post, Gurari argues that briefs that score better on his three factors of argument, drafting accuracy and conforming context have a better chance of winning on average. Judicata presents no statistics to back this assertion, and Gurari allows that the variation may indicate correlation rather than causation.

He tells the ABA Journal he plans to post a comparison of BigLaw briefs, which he says would show that lawyers who prevail tend to follow certain best practices.

An average California case, he writes in the blog post, touches on 2 percent of the state’s case law. As a result, grading a brief for adherence to these practices would be difficult without computer aid.

Briefs are not retained on the Judicata servers, says lead software engineer Ben Pedrick. Users must download a PDF to review the software’s annotations later, or else resubmit the file.

Clerk will be an additional subscription service. Gurari says the markup tool will have a higher price point based on user headcount, but he declined to give a price range.
