The dangers of digital things: Self-driving cars steer proposed laws on robotics and automation

During the first season of the HBO series Silicon Valley, a main character waiting for his ride home is stunned when a car pulls up and the driver’s seat is completely empty—except for a few metal boxes with wires and cords that connect who knows what to who knows where. He gives his home address to the voice-activated system controlling the car, then sits back for a smooth, safe, uneventful ride.

That is, until a computer override kicks in. The car takes a detour to the docks, where it enters a shipping container so it can be ferried to a billionaire’s self-made island in the middle of nowhere. Several days later, the passenger makes it back home, but all he has is a story he’ll look back on and a hard-earned life lesson: Don’t get into cars with strange robots.

This episode underscores several important issues that relate to increased reliance on robotics, artificial intelligence and automation: As robots, computers and software become more ubiquitous in our everyday lives, performing tasks that used to be the sole province of humans, it’s fair to wonder whether the laws and regulations designed to protect those humans are sufficient or whether new laws are necessary.

It’s also a question that surrounds what has become known as the internet of things, an ever-growing collection of products connected to computer systems and the wireless web. As with cash machines, credit-card readers and PCs, those connections can provide portals that allow unauthorized people to hack into systems and cause no end of mischief and mayhem.

“The machines are here, but the law isn’t,” says Ed Walters, CEO at Fastcase. “We need to start figuring how to start regulating robots right now. This isn’t science fiction; this is science present.”

NO NEW THING

Driverless cars have existed, in some form, for decades. Going back to the 1920s, automotive manufacturers have worked on or created several different types of cars that are controlled by something other than a driver. Likewise, the American legal system has long contemplated automated vehicles. In 1991, Congress passed a wide-ranging transportation bill that included a provision calling for the “development of a completely automated highway and vehicle system,” with testing scheduled to commence in 1997.

In the ensuing decades, car manufacturers began to provide features that did not require a driver or operator, including automatic parallel parking, smart brakes and collision-avoidance systems.

Then in 2009, Google announced it would develop a fully autonomous self-driving car and began testing its vehicles the following year. By 2015, Google had started testing its Waymo cars in urban areas, including Mountain View, California, and Austin, Texas.

In the meantime, other manufacturers were getting in on the act. Uber partnered with Carnegie Mellon University to deploy self-driving cars in Pittsburgh. Ford, Mercedes-Benz, General Motors, Toyota, Nissan, Hyundai and others have announced plans to develop their own versions. In 2014, Tesla introduced an autopilot function in its cars, and company CEO Elon Musk predicted that by 2019 humans would be able to sleep through an entire car trip without anyone having to stay awake and operate the steering wheel.

Indeed, falling asleep at the wheel and other crash-causing human activity have been among the biggest drivers of the push to cede autonomy over driving to computers. According to the National Highway Traffic Safety Administration, from 1995 to 2015, there was an average of about 35,000 fatal car crashes each year. The NHTSA also revealed in a 2015 study that drivers were at fault for about 94 percent of all car crashes from 2005 to 2007.

“We’ve made roads better and made cars safer,” says Damien Riehl, vice president at Stroz Friedberg, a data-breach incident-response company. “But people are still drinking and driving, and there are still inexperienced people driving, texting and not paying attention. I’m afraid of robot cars, but I’m terrified of human drivers.”

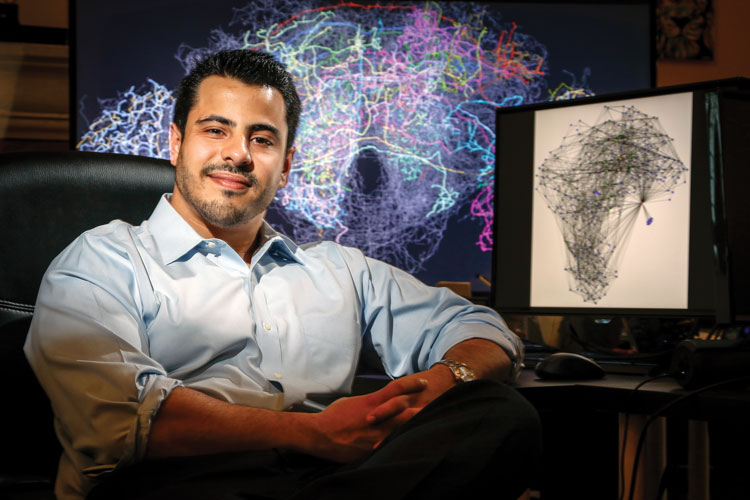

Photo of Andrew Arruda by Tony Avelar.

Andrew Arruda, CEO at Ross Intelligence, says safety isn’t the sole benefit that autonomous cars provide. “Driverless cars will save millions of lives, prevent millions of pounds of carbon emissions, and give trillions of hours of time back to the human race,” he says.

According to Arruda, it’s typical for people to resist new technology, only to adopt it and then take it for granted. “There was also resistance when automatic elevators came to market,” he says.

There will be other benefits. Martin Tully, co-chair of Akerman’s data law practice, says gigantic parking structures will become obsolete when people no longer have to park close to their destination. Simply put, the commuter accustomed to driving to the train station and parking in the lot all day can either direct the car to go home and power down or instruct it to pick up other passengers.

“Meanwhile, if you look at zoning laws, typically you need to have so many parking spaces per unit,” Tully says. “Those numbers are coming down because of driverless cars and ride-sharing. That’s already happening.”

PLAYING CATCH-UP

But the rapid development of autonomous cars has caught legislators asleep at the wheel.

“The technology is ahead of the law in many areas,” Bernard Lu, senior staff counsel at the California Department of Motor Vehicles, told the New York Times in 2010, right after Google began to test its driverless cars. “If you look at the vehicle code, there are dozens of laws pertaining to the driver of a vehicle, and they all presume to have a human being operating the vehicle.”

Since then, more than 40 states and the District of Columbia have introduced laws addressing autonomous vehicles, according to the National Conference of State Legislatures, with about half of those states passing some sort of law that relates to driverless cars.

Some of the laws and executive orders—including those in Utah, Washington and Massachusetts—only cover testing and other early-stage matters, including information gathering and establishing proposed standards. Other states, including New York and Connecticut, have been unwilling to give complete control to robots and have imposed restrictions, including requiring a driver on standby behind the steering wheel at all times.

On the other hand, there’s Michigan—the mother of American car culture. The state made waves in late 2016 for enacting what many commentators called a sweeping and highly permissive set of laws that relate to driverless cars. For example, automated cars don’t have to have a human operator behind the wheel to access public roads in the state.

“One of the main issues for us was whether or not we should know everything that’s going on in the car,” says Kirk Steudle, director of the Michigan Department of Transportation.

He says an early version of the legislation kept getting bogged down in complex details. Additionally, Steudle says, he was concerned that having an overly cumbersome law would make it difficult to adapt to changes in technology.

The state decided to go for a more open-ended, less restrictive set of laws based on the premise that manufacturers and tech companies would abide by the NHTSA’s Federal Automated Vehicles Policy.

This article was published in the March 2018 issue of the ABA Journal with the title "The Dangers of Digi-things: Writing the laws for when driverless cars (or other computerized products) take a wrong turn."