By RAMON ABADIN AND CASEY FLAHERTY

The lecture-style info dump is an excellent way to teach. It is often a horrible way to learn.

From law’s cherished Socratic method to the modern flipped classroom, there has long been a recognition that listening to a lengthy monologue is not always an optimal mechanism for acquiring information, let alone practical skills.

The lecture offers many benefits beyond its economy—the ability to simultaneously convey a message to a large group of people. An engaging speaker, especially one armed with a few arresting visuals, has a unique opportunity to build a narrative and convey emotion. From rousing political speeches and hilarious stand-up routines to thought-provoking TED Talks and effective closing arguments, the basic lecture format has much to recommend it for certain types of presentations. Just not all.

If the mission is to highlight a few major themes, provide some main takeaways, or persuade a group of people to reconsider a pre-existing view on a particular issue, lectures can be an excellent medium (when done well). If the goal is to convey large quantities of detailed information or impart a practical skill, the lecture format is ill suited to the purpose.

Because lectures are so economical, most mandatory continuing legal education (MCLE) is presented in the lecture format irrespective of the learning objective. There is no hard data on whether most lawyers actually pay attention, let alone retain anything from MCLE lectures. But the anecdotal data suggests not so much. The siren call of work (a primary reason CLE is made mandatory in the first place) often proves too strong. One of law’s open secrets is that, economical though they may be, many MCLE lectures deliver limited value.

But the economics and logistics of pedagogy have changed. New tools to measure learning outcomes make a competence-based regime feasible. Previously, any kind of assessment of learning outcomes would have been time- and cost-prohibitive. Now, it is exceedingly simple for a machine to administer and immediately grade an assessment. Some online CLEs have therefore started interspersing lecture content with questionnaires to ensure engagement.

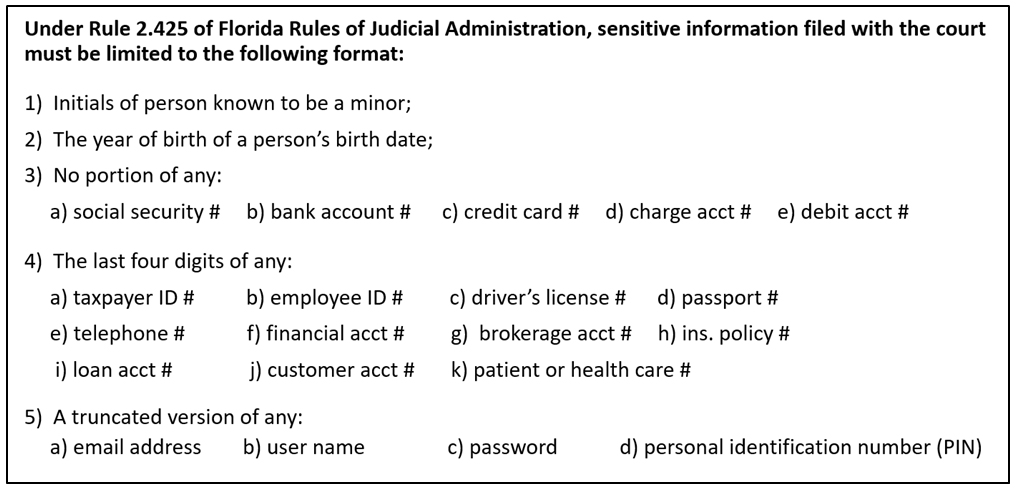

But competence-based CLE should not be thought of simply as a way to test whether lecture material is retained. Competence-based CLE provides a completely different way to deliver content and engage learners. Take, for example, the following slide:

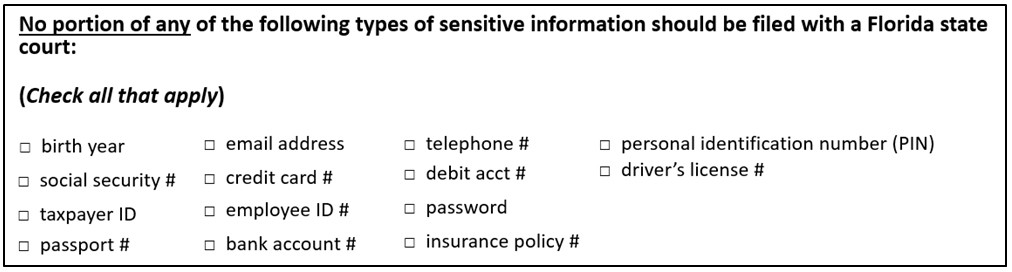

In the lecture format, the lecturer will usually run through a slide and, if the audience is lucky, provide some insight and commentary. While the slide conveys salient information, it is dense. Any learner, especially a passive one, is unlikely to recall many details. Depending on how much additional material is presented, the passive learner might be lucky to take away that Florida has a rule somewhere governing sensitive information filed with the court. It would, however, be simple enough to reinforce this takeaway with a follow-on assessment question like:

This learn-then-test technique is already in use, as with the California Bar’s self-study/self-assessment offerings. Yet the assessment question is valuable in its own right—i.e., without a lecture or any pre-packaged study material. The assessment as sole deliverable can still be a stellar learning tool.

An MCLE participant who is current on the issues presented should not have to waste time “learning” what they already know. But in a time-oriented regime, you don’t get to fast-forward and move on to more challenging and educational content. In a pure assessment approach, however, the participant can “fast-forward” by quickly answering the question correctly.

And where they don’t already know the answer, participants have to do something else: engage with the substance of the material. The participant following the assessment route would, for example, be highly unlikely to simply know the answer to the question above. Instead, they would need to look it up.

That is, they will:

1) Read and think about the question

2) Research the answer

3) Reach the correct conclusion

In short, precisely what lawyers do all the time in their practice.

There is a stark difference in the engagement demanded by

(a) passive receipt of the slide (assuming participant is even paying attention) and

(b) actively solving the problem posed.

While superior for retention, active learning still does not mean that the participant will forever have perfect recall of the details.

But they are far more likely to retain a general sense of both the content of the rule and—this is important—how to locate the rule when they need to refer to it.

This kind of competence-based CLE is not a mini bar exam.

• First, it is optional—it is a learning alternative that expands choice.

• Second, the material is neither prescribed nor uniform. Lawyers still get to select which CLE they pursue.

• Third, it is open universe. Rather than memorization, it is assessing the ability to think critically about legal questions, perform the appropriate research, and come to correct conclusions.

• Fourth, the administering software can provide immediate feedback on whether a question was answered correctly. Participants don’t get just one shot; they get as many opportunities as they need to reach a correct answer.

• Fifth, it need not be timed (though it could be). Participants can start and stop a digitally administered assessment at their convenience and take however long they need to reach the right answer.
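To make the fourth point concrete, here is a minimal Python sketch of how software might score a check-all-that-apply question and give immediate feedback. It assumes (our assumption, not a description of any bar’s actual platform) that credit requires selecting exactly the correct set of options; the class name, function name, and sample content are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CheckAllQuestion:
    """Hypothetical check-all-that-apply question.

    `correct` holds the indices of the options that must be checked;
    every other option must be left unchecked.
    """
    prompt: str
    options: tuple[str, ...]
    correct: frozenset[int]

    def is_correct(self, selected: set[int]) -> bool:
        # Credit only for an exact match: no correct option missed,
        # no incorrect option checked.
        return frozenset(selected) == self.correct


def submit(question: CheckAllQuestion, selected: set[int]) -> str:
    """Grade one attempt and return immediate feedback.

    Nothing limits the number of attempts; after a wrong answer the
    participant simply submits again.
    """
    return "Correct." if question.is_correct(selected) else "Not yet. Try again."


# Usage with placeholder content (not a real assessment question).
q = CheckAllQuestion(
    prompt="Which of the following apply?",
    options=("Option A", "Option B", "Option C", "Option D"),
    correct=frozenset({0, 2}),
)
print(submit(q, {0}))        # Not yet. Try again.
print(submit(q, {0, 1, 2}))  # Not yet. Try again.
print(submit(q, {0, 2}))     # Correct.
```

Requiring an exact match is what keeps partial or scattershot selections from earning credit, which matters for the guessing question discussed next.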

The idea of assessments always raises the specter of cheating/gaming. Couldn’t the person just randomly click answers until they found the right one by happenstance? In theory. But it might take a while. Look again at the way the example question is structured. It is not multiple-choice. It is check-all-that-apply. With 14 answer options, there are 16,384 unique answer combinations. Guessing would demand far more effort than finding the correct answer.
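The 16,384 figure follows from treating each of the 14 options as an independent checked-or-unchecked choice. The short Python sketch below verifies that count and, under the simplifying assumption that exactly one combination is correct and every attempt is a blind guess, estimates how many submissions a pure guesser would need on average.

```python
from math import comb

NUM_OPTIONS = 14  # answer options in the example question

# Each option is independently checked or unchecked, so the number of
# possible submissions is 2 ** 14 (this count includes checking nothing).
combinations = 2 ** NUM_OPTIONS

# Cross-check: sum the ways to check exactly k options, for k = 0..14.
assert sum(comb(NUM_OPTIONS, k) for k in range(NUM_OPTIONS + 1)) == combinations

# Average number of submissions before hitting the one correct combination:
# a guesser who never repeats a combination needs (N + 1) / 2 tries;
# one who clicks at random, possibly repeating, needs N tries.
expected_systematic = (combinations + 1) / 2
expected_random = combinations

print(combinations, expected_systematic, expected_random)  # 16384 8192.5 16384
```

Even the disciplined guesser faces thousands of submissions, which is the sense in which guessing demands more effort than looking up the rule.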

Moreover, the question structure also lends itself well to minor modifications. The author could produce dozens of variations of essentially the same question by tweaking the emphasis (e.g., categories of sensitive information) and response options. If there were 10 variations for each of 20 questions on an assessment, the machine would be able to generate 10²⁰ (100 quintillion) unique assessments. Creating an answer key would be near impossible without access to the question repository. And even if it existed, navigating a cheat sheet would be more work than arriving at the answer through standard means (e.g., Google).
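The arithmetic behind the 10²⁰ figure, and one way a machine might assemble an individual assessment from a bank of variants, can be sketched in Python. The question bank below is a hypothetical placeholder, and the sketch assumes each of the 20 question slots draws independently from its own 10 variants.

```python
import random

QUESTIONS = 20  # question slots on the assessment (figure from the text)
VARIANTS = 10   # variations written for each question (figure from the text)

# One independent choice of variant per slot gives VARIANTS ** QUESTIONS
# distinct assessments: 10 ** 20, or 100 quintillion.
unique_assessments = VARIANTS ** QUESTIONS


def assemble_assessment(bank: list[list[str]], rng: random.Random) -> list[str]:
    """Draw one variant for each question slot.

    `bank` is a hypothetical question repository in which bank[i] lists
    the variants available for slot i.
    """
    return [rng.choice(variants) for variants in bank]


# Placeholder bank: variant j of question i is labeled "Q<i>-v<j>".
bank = [[f"Q{i}-v{j}" for j in range(VARIANTS)] for i in range(QUESTIONS)]

rng = random.Random()  # pass a seed, e.g. random.Random(42), for reproducible draws
first = assemble_assessment(bank, rng)
second = assemble_assessment(bank, rng)

print(len(first), unique_assessments == 10 ** 20)  # 20 True
print(first == second)  # almost certainly False: the chance of a repeat is 1 in 10**20
```

Because each participant’s draw is effectively unique, a shared answer key would have to cover the entire repository rather than a single fixed test.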

Machine administration changes the nature of the assessment process. It also opens up additional possibilities. Imagine if the question about filing sensitive information with the court was then followed by a task in which the participant needed to properly redact sensitive information from a document. The participant would download the document, redact the sensitive information, and upload the edited document for the machine to grade. That is, the approach would not only assess substantive knowledge but also the attendant practical skills.
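A redaction exercise like this could be machine-graded in several ways. One minimal approach, sketched below in Python, assumes the uploaded document’s text layer has already been extracted to a plain string and that the question author has listed which strings must disappear and which must survive; the function name and sample text are hypothetical. Checking the extracted text, rather than how the page looks, would also catch the familiar mistake of drawing a black box over text that remains in the file underneath.

```python
def grade_redaction(redacted_text: str,
                    must_remove: list[str],
                    must_keep: list[str]) -> tuple[bool, list[str]]:
    """Hypothetical grader for an uploaded, redacted document.

    Passes only if every sensitive string is gone from the extracted text
    and every non-sensitive string the author designated is still there
    (so deleting the whole document does not earn credit).
    """
    problems = [f"still visible: {s!r}" for s in must_remove if s in redacted_text]
    problems += [f"over-redacted: {s!r}" for s in must_keep if s not in redacted_text]
    return (not problems, problems)


# Placeholder data; the "sensitive" value is made up for illustration.
sensitive = ["123-45-6789"]
keep = ["was filed on behalf of the plaintiff"]

good = "Record [REDACTED] was filed on behalf of the plaintiff."
missed = "Record 123-45-6789 was filed on behalf of the plaintiff."
blanked = "[REDACTED]"

print(grade_redaction(good, sensitive, keep))     # (True, [])
print(grade_redaction(missed, sensitive, keep))   # (False, ["still visible: '123-45-6789'"])
print(grade_redaction(blanked, sensitive, keep))  # (False, ["over-redacted: 'was filed on behalf of the plaintiff'"])
```

The returned list of problems doubles as the immediate feedback described earlier, telling the participant what to fix before resubmitting.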

We wrote “imagine.” You won’t have to for long. The state bars of Florida and Minnesota, which get credit for being first, are already developing precisely the kind of competence-based CLE described above. If as a member of either bar you find the concept of competence-based CLE off-putting, don’t worry.

It’s optional. Those bar members who think they will get more out of competence-based MCLE, however, are welcome to pursue it.

In Florida, competence-based CLE will be optional. But technology, the topic of the Florida Bar’s initial competence-based CLE module, is not. Florida is the first state to mandate technology-related CLE, the topic of our next post.

Ramon Abadin is the immediate-past president of the Florida Bar and partner at Sedgwick. Casey Flaherty is of counsel and director of client value at Haight Brown & Bonesteel and serves on the advisory board of Nextlaw Labs. Casey is also the founder of the legal operations consultancy Procertas and the creator of the Legal Technology Assessment, which (full disclosure) will power the competence-based CLE offering described in the foregoing article.

Updated Nov. 18 to correct a calculation error introduced in the editing process.